As the COVID-19 pandemic cut its destructive path across the globe, it left no area of our lives and livelihoods untouched. From seemingly trivial everyday details to macro-scale policies, everyone is affected by its consequences: not just by the risk of infection itself, but by the restrictions imposed to prevent it. Social distancing and a widespread transition to teleworking mean we increasingly interact with the physical world through what is, in essence, reality’s “digital twin,” often using cameras to create a digital representation of the physical world.

Enterprises and cities are at the core of the economic recovery, and they have an urgent need for tools and technologies to help them with reopening, compliance, cleaning, and prevention efforts. Fujitsu is developing smart city video analytics for public safety applications, to serve as pandemic response aids. These video analytics applications use the Internet of Things (IoT) to enhance physical public safety measures such as face masks and social distancing—without the additional health risks of, for example, assigning security guards or police officers to be physically present in public places.

Contactless Video Technology Monitors Social Distancing

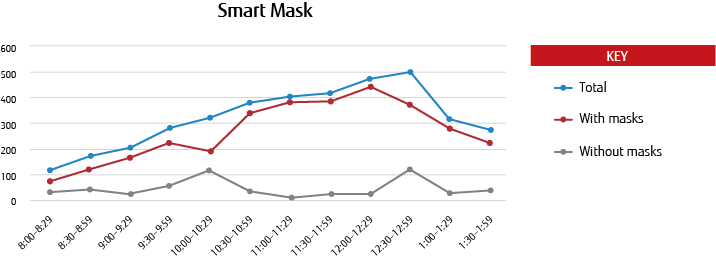

Fujitsu smart city video analytics for public safety applications are contactless, video-based solutions that offer a cost-effective way to help businesses and municipal facilities reopen safely. One example is our “Smart Distancing” application, which uses networked video technology to detect when people are within six feet of each other. Identifying these close-contact hot spots allows organizations to make physical adjustments to their facilities, especially during the initial reopening phases. “Smart Masks” is another application, which counts how many people are wearing masks.
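The six-foot check at the heart of an application like this can be illustrated with a short sketch. It is not the Smart Distancing implementation: it assumes each detected person has already been mapped from image pixels to a ground-plane position measured in feet (a camera calibration step not shown here), and the function name `close_contact_pairs` is hypothetical.

```python
import math
from itertools import combinations

SIX_FEET = 6.0  # distancing threshold taken from the article

def close_contact_pairs(positions, limit=SIX_FEET):
    """Return index pairs of people standing closer than `limit` feet.

    `positions` is a list of (x, y) ground-plane coordinates in feet.
    Converting pixels to feet is assumed to have happened upstream.
    """
    pairs = []
    for (i, a), (j, b) in combinations(enumerate(positions), 2):
        if math.dist(a, b) < limit:  # straight-line distance on the ground
            pairs.append((i, j))
    return pairs

# Three people: the first two stand 5 ft apart, the third is far away.
people = [(0.0, 0.0), (3.0, 4.0), (20.0, 20.0)]
flagged = close_contact_pairs(people)
```

Counting how often each floor area shows up in flagged pairs over a day is one simple way to locate the hot spots described above.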

In some cases, a simple posted notice or audio alert that discourages people from lingering in an area is enough to drastically reduce the number of people who come into close physical contact.

Remote video monitoring of popular locations in a city can assist city staff in addressing the public’s concerns regarding social distancing compliance, without overburdening the police force with requirements to physically attend to each area of concern.

Restaurant chains can also use the video app to monitor occupancy and social distancing compliance remotely. In addition, they can verify that cleaning crews have disinfected tables and seats after customers leave.

Smart city video analytics for public safety applications can process images from existing IP-based video camera streams. If a camera infrastructure does not exist, the cost of building it is lower than the cost of hiring extra security staff.

Video-based applications have two advantages over Wi-Fi/BLE or smartphone apps: the same video infrastructure can be used for multiple applications, and it does not require users to opt in to become useful. In addition, stored video makes it possible to go back in time and perform contact tracing or post-incident diagnosis on the recorded data.

Classification, Detection, and Segmentation

Video analytics applications use classification, detection, and segmentation to identify objects in an image.

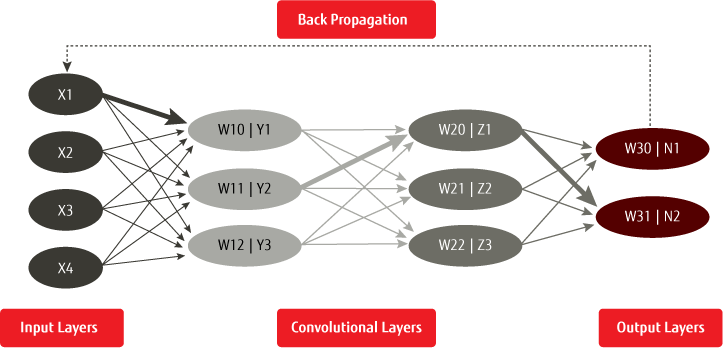

During classification, the algorithms decide what things are in the picture and label them. Algorithms can classify objects based on the models that they are trained on. For everyday items, models are readily available. For specialized applications, we build models from large datasets. Convolutional Neural Networks (CNNs), for example, are effective for image recognition and classification because they are good at recognizing patterns. A CNN builds on the multilayer perceptron, in which data and its weights are fed from one layer to the next. During the training phase, known inputs and outputs are used to “learn from mistakes.” A backpropagation algorithm compares the predicted output with the known output, propagates the error back to the previous layers, and adjusts the weights that are used. This process is repeated until the error falls below a set threshold.
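To make that training loop concrete, here is a minimal sketch in plain Python. It assumes a tiny fully connected network (two inputs, two hidden units, one output) rather than a real CNN, and the toy OR data, starting weights, learning rate, and threshold are all illustrative choices, not values from any production model.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

# Toy training set (assumption): learn the logical OR of two inputs.
OR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

def train(samples, lr=0.5, threshold=0.01, max_epochs=20000):
    """Train a 2-2-1 network; return the mean squared error of each epoch."""
    # Fixed, arbitrary starting weights so every run is repeatable.
    w_hidden = [[0.5, -0.4], [0.3, 0.8]]  # w_hidden[j][i]: input i -> hidden j
    b_hidden = [0.0, 0.0]
    w_out = [0.6, -0.3]                   # hidden j -> output
    b_out = 0.0
    history = []
    for _ in range(max_epochs):
        total = 0.0
        for x, target in samples:
            # Forward pass: feed the data and weights from layer to layer.
            hidden = [
                sigmoid(sum(w * xi for w, xi in zip(w_hidden[j], x)) + b_hidden[j])
                for j in range(2)
            ]
            out = sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)
            # Compare the predicted output with the known output.
            error = out - target
            total += error * error
            # Backward pass: push the error back to the previous layer.
            delta_out = error * out * (1.0 - out)
            delta_hidden = [
                delta_out * w_out[j] * hidden[j] * (1.0 - hidden[j])
                for j in range(2)
            ]
            # Adjust the weights in the direction that reduces the error.
            for j in range(2):
                w_out[j] -= lr * delta_out * hidden[j]
                b_hidden[j] -= lr * delta_hidden[j]
                for i in range(2):
                    w_hidden[j][i] -= lr * delta_hidden[j] * x[i]
            b_out -= lr * delta_out
        history.append(total / len(samples))
        if history[-1] < threshold:  # stop once the error is below the threshold
            break
    return history

errors = train(OR_SAMPLES)
```

The `max_epochs` cap is a safety net: training stops at whichever comes first, the error threshold or the epoch limit.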

Classification by itself is not very useful, because images usually contain multiple objects that interact with each other. During the detection phase, multiple objects in the image are identified and their locations are determined. A confidence level for each detection is also determined during this phase. Regression-based algorithms divide the image into multiple regions and run the detection algorithms on them in parallel.
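As a rough illustration of what a detection phase hands to the rest of an application, the sketch below models each result as a label, a bounding box, and a confidence score, then counts the people it is reasonably sure about. The `Detection` fields and the 0.5 cutoff are assumptions made for this example, not the output format of any particular detector.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class Detection:
    label: str                       # what the object is, e.g. "person"
    box: Tuple[int, int, int, int]   # (x_min, y_min, x_max, y_max) in pixels
    confidence: float                # 0.0 (no confidence) to 1.0 (certain)

def count_label(detections: List[Detection], label: str,
                min_confidence: float = 0.5) -> int:
    """Count detections of `label` at or above the confidence cutoff."""
    return sum(
        1 for d in detections
        if d.label == label and d.confidence >= min_confidence
    )

# Hypothetical results for a single video frame.
frame = [
    Detection("person", (40, 60, 120, 300), 0.93),
    Detection("person", (200, 80, 270, 310), 0.71),
    Detection("person", (500, 90, 530, 150), 0.22),  # too uncertain to count
    Detection("bench", (300, 250, 480, 330), 0.88),
]
people_in_frame = count_label(frame, "person")
```

Raising `min_confidence` trades missed detections for fewer false alarms, which is the kind of tuning an occupancy monitor would need per site.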

During segmentation, larger objects are divided into their individual component objects. By dividing the larger objects into smaller ones, we obtain a more granular understanding of each one. For example, in addition to knowing there are a number of people in a scene, we can identify where one person ends and where the next person begins.
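One way to picture segmentation output is as a grid the same size as the image, where each cell holds the ID of the person it belongs to. The sketch below assumes that representation (0 for background, 1, 2, ... for individual people) and reports the extent and pixel count of each person. The mask values and the function name `instance_extents` are made up for illustration.

```python
def instance_extents(mask):
    """Summarize each labeled instance in a 2D mask.

    `mask` is a list of rows; each cell is 0 (background) or a positive
    instance ID. Returns {id: {"left", "right", "top", "bottom", "pixels"}}.
    """
    extents = {}
    for row_idx, row in enumerate(mask):
        for col_idx, instance_id in enumerate(row):
            if instance_id == 0:
                continue  # background pixel
            e = extents.setdefault(instance_id, {
                "left": col_idx, "right": col_idx,
                "top": row_idx, "bottom": row_idx, "pixels": 0,
            })
            e["left"] = min(e["left"], col_idx)
            e["right"] = max(e["right"], col_idx)
            e["top"] = min(e["top"], row_idx)
            e["bottom"] = max(e["bottom"], row_idx)
            e["pixels"] += 1
    return extents

# Two people standing side by side in a tiny 3 x 6 "image".
mask = [
    [0, 1, 1, 0, 0, 0],
    [0, 1, 1, 2, 2, 0],
    [0, 1, 1, 2, 2, 0],
]
extents = instance_extents(mask)
```

Here person 1 ends at column 2 and person 2 begins at column 3, even though the two regions touch; a single bounding box around the pair could not show that boundary.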

Over the last 10 years, the time needed to recognize objects in an image has dropped from 20 seconds to 20 ms, a thousandfold improvement. This speedup is enabled by the availability of GPUs and faster algorithms. We can now detect objects in real time from live camera feeds, in addition to video files and still pictures.

As we provide remote access to the statistical data and camera images, users will be able to make informed decisions about visiting a location (for example, parks, restaurants, stores, and so on) at different times of the day.

In addition to the graphical view, we can provide statistical data through open APIs. Integrating data from our application into higher-value applications will provide end-to-end visibility into daily operations and help improve efficiency. Video analytics applications can be used in cities, enterprises, college campuses, airports, hospitals, and any other place that brings large numbers of people together.
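To give a sense of the kind of statistical data an open API could return, the sketch below rolls per-frame counts up into an hourly summary and serializes it as JSON. The field names (`avg_people`, `max_close_contacts`), the sample observations, and the location name are all hypothetical; the article does not specify an actual API schema.

```python
import json
from collections import defaultdict
from datetime import datetime

def hourly_summary(location, observations):
    """Build a JSON summary from (ISO timestamp, people, close_contacts) rows."""
    buckets = defaultdict(list)
    for timestamp, people, close_contacts in observations:
        hour = datetime.fromisoformat(timestamp).hour
        buckets[hour].append((people, close_contacts))
    hours = []
    for hour in sorted(buckets):
        rows = buckets[hour]
        hours.append({
            "hour": hour,
            "avg_people": sum(p for p, _ in rows) / len(rows),
            "max_close_contacts": max(c for _, c in rows),
        })
    return json.dumps({"location": location, "hours": hours})

# Made-up observations for one location on one day.
sample = [
    ("2020-06-01T09:05:00", 4, 0),
    ("2020-06-01T09:35:00", 6, 1),
    ("2020-06-01T12:10:00", 20, 5),
]
payload = hourly_summary("Example Park", sample)
```

A consumer of this payload, such as a city dashboard or a visitor-facing app, could use the hourly averages to suggest quieter times to visit.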

Cities and enterprises with private 5G networks can take advantage of edge compute servers to build a wireless video infrastructure. Running video analytics on multi-access edge computing (MEC) servers reduces the video traffic that has to cross the core network and makes outdoor multi-camera solutions economically feasible.

Note: The author would like to thank Nannan Wang, Paparao Palacharla, Jon Kokko, and Xi Wang for their contributions to this article.