Well-connected clouds with frameworks architected for hyperconverged digital infrastructure create a critical need for network intelligence in Layers 0-3. Slowdowns, degradations, and failures in remote network elements inevitably cascade into major usability problems at customer endpoints, setting off alarm storms all along the way. As a result, modern applications running on those endpoints depend heavily on deep visibility into the network and its connections, as well as the supporting compute and storage elements. Communication Service Providers (CSPs) need specialized databases, AI, and analytics tools to pinpoint, correlate, and fix root causes before they severely impact end-user experience.

Time-Series Databases Support Real-Time, Data-Driven Business Decisions

Time-series database technology holds the key to the kind of muscular intelligence and analytics needed to support top performance on hyperconnected cloud networks. Time-series databases, as the name suggests, track measurements and events over time. They are able to analyze change, trend, seasonality, cycles and fluctuations, which makes time-series databases uniquely powerful in a network context. Time-series databases thus offer enormous potential for CSPs; beyond building customer loyalty through improved service quality, the ability to develop new revenue streams and protect annuity business creates an urgent need for a more agile, responsive infrastructure.

Metrics on a New Dimension

The potential for time-series analytics extends far beyond network performance monitoring and diagnostics. One area where time-series databases offer abundant new opportunities, for example, is the Internet of Things (IoT). Before long, everything imaginable will have an IoT sensor that constantly streams operational metrics and time stamps: fitness devices; appliances; groceries; mobile phones; utilities; homes and businesses; factories; smart cities; all types of transportation; and more. To use all these sensors in real-time decision-making, it is necessary to capture granular performance metrics across all systems. Using time-series database technology, CSPs can help their customers capture and monetize these new sources of data, in addition to expanding the networked data sources they already have access to. This will be a crucial differentiator for new B2B and B2C communications service offerings.

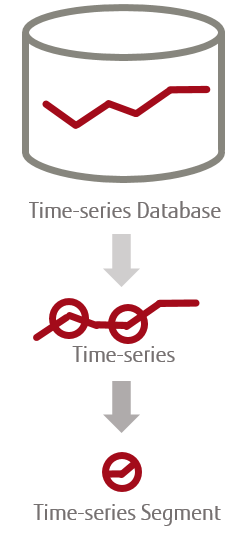

Time-Series Databases Measure and Analyze Changes Over Time

A time-series database is optimized to measure changes over time. It handles time-series queries capable of scanning millions of records in milliseconds. Time-series databases include time stamp data compression and storage, data summaries, and data lifecycle management. Types of data can include:

- Network traffic metrics or application performance measurements that trigger application reallocation

- Zed zone memory utilization that deploys additional compute resources

- Network events triggering changes to centralized path computation, dynamic restoration, or spinning up a new network-as-a-service instance

By tracking changes over time, digital experts in runtime operations can help plan capacity increases, isolate problems faster, and even pinpoint updates that create a poor user experience.
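Time stamp compression of the kind mentioned above often works because readings arrive at near-regular intervals. The following is a minimal sketch of delta encoding, one common technique for this; it illustrates the idea only and is not the storage format of any particular product. The function names and sample timestamps are hypothetical.

```python
def delta_encode(timestamps):
    """Keep the first timestamp as-is, then store only the gaps between readings."""
    if not timestamps:
        return []
    encoded = [timestamps[0]]
    for previous, current in zip(timestamps, timestamps[1:]):
        encoded.append(current - previous)
    return encoded


def delta_decode(encoded):
    """Rebuild the original timestamps by accumulating the stored gaps."""
    timestamps = []
    current = 0
    for value in encoded:
        current += value
        timestamps.append(current)
    return timestamps


# Hypothetical Unix timestamps (seconds), collected roughly every 10 seconds.
readings = [1700000000, 1700000010, 1700000020, 1700000031, 1700000040]
encoded = delta_encode(readings)
print(encoded)
```

After the first entry, the encoded values are small integers, which need far fewer bits than full timestamps when written to disk.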

For example, a time-series database might use any of this data in a data summary request that queries the percentage increase over seven days compared with the same period in the last month, summarized by week. The time-series database supports this by inserting a new timestamped reading each time data is collected, rather than overwriting the previous reading in the record. An overwritten record shows only the system’s current state, whereas a time-series database keeps the full history, so users can analyze changes over time almost instantly. Relational and transactional databases require external capabilities for data lifecycle management to perform this type of analysis. With a time-series database, this functionality is available out of the box.
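The summary request described here can be sketched in plain Python. This is a minimal illustration, assuming an in-memory append-only list in place of a real database; the `record`, `weekly_totals`, and `percent_change` helpers and the sample values are hypothetical.

```python
from datetime import datetime, timedelta

# Append-only series: every reading becomes a new (timestamp, value) row,
# so earlier readings are never overwritten.
series = []


def record(ts, value):
    series.append((ts, value))


def weekly_totals(points, start, weeks):
    """Sum values into consecutive 7-day buckets beginning at `start`."""
    totals = [0.0] * weeks
    for ts, value in points:
        index = (ts - start).days // 7
        if 0 <= index < weeks:
            totals[index] += value
    return totals


def percent_change(current, previous):
    """Percentage increase of `current` over `previous` (None if undefined)."""
    if previous == 0:
        return None
    return (current - previous) * 100.0 / previous


start = datetime(2024, 1, 1)
# Hypothetical data: one reading per day for 8 weeks,
# 100 units per day for the first 4 weeks, then 120 units per day.
for day in range(56):
    record(start + timedelta(days=day), 100.0 if day < 28 else 120.0)

totals = weekly_totals(series, start, weeks=8)
latest_state = series[-1]                      # what an overwrite-only record would keep
change = percent_change(totals[7], totals[3])  # latest week vs. the week four weeks earlier
print(totals, change)
```

Because the history is retained, the comparison against the earlier week is a simple aggregation; with an overwrite-only record, only `latest_state` would be available.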

The Power of Measuring Time on its Own Axis

Time-series databases work with a type of streaming data that harnesses the power of time on its own axis, enabling data analytics on a whole new dimension. These databases create a series of dynamic, related, and interdependent segments that can be analyzed and acted on as needed. In simple terms, this means a series of sequential time stamps tracking all sorts of changes (over seconds, hours, days, weeks, months, or years) that can be collected and analyzed. A purpose-built database ingests and processes the data streams of time-series workloads. Financial and business forecasting, weather prediction, self-driving vehicles, and automated manufacturing are all areas where processing large amounts of data over time offers particularly compelling benefits.

Data Analytics that Identify Hidden Opportunities in Any Dataset

Time-series databases and their native tools include other built-in capabilities for time-series data analysis, including continuous queries, time aggregations and downsampling, event replays, data retention policies, and more. Analyzing trends at the data layer helps identify hidden opportunities in any dataset, no matter how big it is. While storing and analyzing a large amount of unstructured data can be challenging, these databases have distinct capabilities that make it possible to query enormous amounts of data. Advanced search tools leverage time-series databases at scale, rather than offloading, aggregating, normalizing, and analyzing data in an Excel workbook or a third-party business analytics application. A time-series database also accommodates applications’ growing time-series data stores, reducing processing overhead, latency, and performance impacts within the application itself.
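Two of these capabilities, downsampling and data retention, can be illustrated with a short sketch. It assumes raw readings held in a Python list rather than in a database, and the helper names `downsample_mean` and `apply_retention` are hypothetical; real products expose these features through their own query languages and policies.

```python
from datetime import datetime, timedelta

EPOCH = datetime(1970, 1, 1)


def downsample_mean(points, bucket):
    """Average (timestamp, value) points into fixed-width time buckets."""
    sums = {}
    for ts, value in points:
        # Align each timestamp to the start of its bucket.
        bucket_start = EPOCH + ((ts - EPOCH) // bucket) * bucket
        total, count = sums.get(bucket_start, (0.0, 0))
        sums[bucket_start] = (total + value, count + 1)
    return [(key, total / count) for key, (total, count) in sorted(sums.items())]


def apply_retention(points, now, max_age):
    """Keep only points that are no older than `max_age` relative to `now`."""
    cutoff = now - max_age
    return [(ts, value) for ts, value in points if ts >= cutoff]


t0 = datetime(2024, 1, 1)
# Hypothetical raw data: one reading per minute for three hours.
raw = [(t0 + timedelta(minutes=i), float(i)) for i in range(180)]

hourly = downsample_mean(raw, timedelta(hours=1))
recent = apply_retention(raw, now=t0 + timedelta(hours=3), max_age=timedelta(hours=1))
print(hourly)
print(len(recent))
```

A common pattern is to keep the compact hourly summaries for a long time while the raw per-minute readings age out under a shorter retention window.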

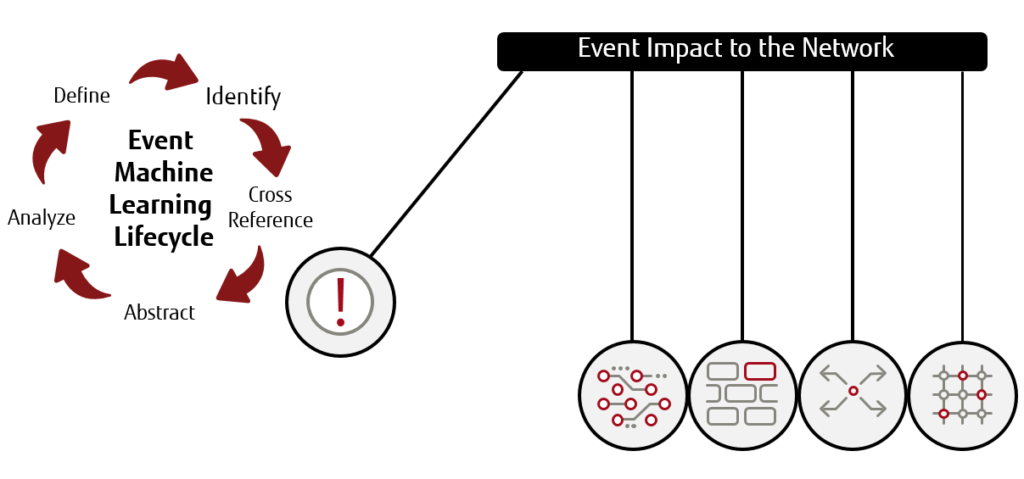

When customers use mobile applications to shop, pay bills, and service their accounts, a time-series database, together with log query tools like Elasticsearch, can deliver hundreds of data points on the back end. These data points include personal account information, device type, what the application is doing and when, what services are running, and how they affect the customer experience in real time. DevOps can use them to improve customer engagement. Complementary partner services can be served up dynamically, alongside organic services, with standard dashboards that surface small clusters of performance issues. Some time-series databases can integrate with third-party visualization tools, creating custom dashboards and graphs with a few clicks. For example, when analyzed with machine learning and artificial intelligence, slow page load times for a small group of users may surface network anomalies that would not typically attract notice until a more widespread service delivery impact occurs.

Predict Optical Span Loss Before it Impacts Transmission Quality

In another example, it’s possible to predict optical span loss in an optical line system. Time-series databases provide the wide data required to create time-series summaries that increase the accuracy of failure predictions. Microapplications using span loss data can forecast when defined thresholds will be reached and trigger automated remediation before transmission quality is impacted.
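As a rough illustration of this kind of forecast, the sketch below fits a straight line to daily span loss summaries and estimates when a threshold would be crossed. The linear trend, the sample readings, the 22 dB threshold, and the function names are all assumptions made for the example; a production system would likely use richer models and real measurements.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def days_until_threshold(days, losses_db, threshold_db):
    """Estimate days from the last reading until the fitted trend hits the threshold.

    Returns None when there are too few points or the loss is not increasing.
    """
    if len(days) < 2:
        return None
    slope, intercept = fit_line(days, losses_db)
    if slope <= 0:
        return None
    crossing_day = (threshold_db - intercept) / slope
    return max(0.0, crossing_day - days[-1])


# Hypothetical daily summaries: span loss rising by 0.1 dB per day from 20 dB.
days = list(range(10))
losses_db = [20.0 + 0.1 * d for d in days]

remaining = days_until_threshold(days, losses_db, threshold_db=22.0)
print(remaining)
```

A microapplication could compare the estimated remaining time against its maintenance lead time and open a remediation task when the margin becomes too small.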

To learn more about building your own proactive optical network, watch this two-minute video.

“By 2022, 70% of all organizations will accelerate their use of digital technologies, transforming existing business processes to drive customer engagement, employee productivity, and business resilience,” according to IDC FutureScape: Worldwide Cloud 2021 Predictions. That means intelligence everywhere, all the time. How will your business use it?