And How Synchronization Requirements are Evolving

Networks are made up of equipment in different locations, communicating with each other. Think of an orchestra – the conductor is the master clock, and all the musicians play their instruments (equipment) in time with the conductor and hence with each other. A harmonious melody results. Without the conductor some musicians will play faster and others slower, and over time the harmony drifts into cacophony.

Synchronization Basics: Frequency, Phase, and Time of Day

Frequency is how often something happens: in music, 3/4 time means three beats per measure, 4/4 time means four beats per measure. In communications terms this is the frequency of a signal. For two nodes to communicate, they must operate at the same speed. For example, a 100 GbE port on one router cannot be connected directly to a 400 GbE port on another router.

Phase relates to the point in time when the notes land. For a waltz the notes land on the beat with rhythmic regularity, while in jazz the notes are played sometimes before, sometimes after the beat. Frequency is the beat, and phase is the timing of the notes. In communications, short-term variation in the phase of a signal is known as jitter (slower variation is called wander), and it degrades network performance.
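To make the frequency/phase distinction concrete, here is a minimal Python sketch. It compares observed clock edges against where an ideal clock of the nominal period would place them, and reports the peak-to-peak phase deviation. The function names and the sample timestamps are hypothetical and chosen only for illustration.

```python
def phase_errors_ns(edge_times_ns, nominal_period_ns):
    """Deviation of each observed edge from the ideal position (in ns).

    The ideal clock is assumed to start at the first observed edge and
    tick exactly every nominal_period_ns after that.
    """
    first = edge_times_ns[0]
    return [t - (first + i * nominal_period_ns)
            for i, t in enumerate(edge_times_ns)]


def peak_to_peak(values):
    """Spread between the largest and smallest value."""
    return max(values) - min(values)


# Hypothetical edges of a clock that should tick every 1000 ns.
observed_edges_ns = [0, 1020, 1980, 3010, 4000]

errors_ns = phase_errors_ns(observed_edges_ns, 1000)
jitter_pp_ns = peak_to_peak(errors_ns)

print(errors_ns)     # [0, 20, -20, 10, 0]
print(jitter_pp_ns)  # 40
```

In this made-up trace the average frequency is exactly right (five edges span 4000 ns), yet individual edges land early or late. That is the waltz-versus-jazz difference: same beat, different note timing.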

Time of Day is just that – the time you see when you look at a clock. If the concert starts at 8pm but the lead violinist shows up at 9pm because of a slow watch, then you might not be happy and demand your money back.

Telecom Network Synchronization

Since the advent of TDM (Time Division Multiplexing), synchronization has been at the heart of telecom networks. One node was synchronized to a master clock, and other nodes derived their sync from the links connected to that node. If sync was lost, frame slips would occur, resulting in data loss. This method of distributing the master clock throughout the network continued as links were upgraded to SONET/SDH speeds. Today, networks and their synchronization requirements have evolved – a topic I will explore further in this blog series.
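A rough back-of-the-envelope sketch in Python shows why even a small frequency mismatch eventually causes a frame slip. It assumes the receiving node absorbs drift in a buffer one frame deep and that a TDM frame lasts 125 microseconds (an 8 kHz frame rate); both values, the 1 ppm offset, and the function name are illustrative assumptions rather than figures from this article.

```python
def seconds_between_slips(buffer_seconds, fractional_offset):
    """Time for two clocks to drift apart by one buffer's worth.

    fractional_offset is the relative frequency difference between the
    two clocks, e.g. 1e-6 for 1 part per million.
    """
    if fractional_offset <= 0:
        raise ValueError("fractional_offset must be positive")
    return buffer_seconds / fractional_offset


FRAME_SECONDS = 125e-6      # assumption: one 125 microsecond TDM frame
OFFSET_1_PPM = 1e-6         # hypothetical mismatch between the two nodes

interval = seconds_between_slips(FRAME_SECONDS, OFFSET_1_PPM)
print(f"{interval:.1f} s between slips")
```

Under these assumptions a 1 ppm mismatch produces a slip roughly every two minutes, which is why every node was traced back to a common master clock.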

Power Grid Synchronization

The electrical grid is a network of interconnected power generation stations. All power stations on the grid must be synchronized in frequency (50 or 60 Hz) and phase, with the positive and negative polarities oscillating in unison. Otherwise power surges, ripples, and other disruptions can cripple the grid.
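The link between frequency and phase can be shown with simple arithmetic: a generator running slightly off the grid frequency accumulates phase error at 360 degrees per cycle of difference. The short Python sketch below computes that drift; the function name and the 0.01 Hz offset are hypothetical examples, not figures for any real grid.

```python
def phase_drift_degrees(frequency_offset_hz, elapsed_seconds):
    """Phase error accumulated by a source running off-frequency.

    Each full cycle of difference between the two frequencies
    corresponds to 360 degrees of phase.
    """
    return 360.0 * frequency_offset_hz * elapsed_seconds


# Hypothetical generator running 0.01 Hz faster than the grid.
drift = phase_drift_degrees(0.01, 25)
print(f"{drift:.1f} degrees after 25 s")
```

With this assumed offset the generator is about a quarter cycle out of step after 25 seconds, so matching frequency alone is not enough; phase has to be held as well.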

The Fall and Rise of Network Distributed Synchronization

Old timers, like myself, who have spent more than twenty years in the optical industry will remember that the transport and distribution of synchronization used to be integral to the network. The “S” in SONET and SDH in fact stands for Synchronous, and this was embedded in the protocols and the equipment.

As the network evolved towards Ethernet flows being transported over OTN and DWDM, the reference network architecture was simplified and synchronization functionality at the optical transport layer was largely eliminated. Today, “the old is new again” as network operators once again face the need to transport a synchronization reference signal – this time with stricter and additional requirements.

5G and Time Division Duplexing (TDD)

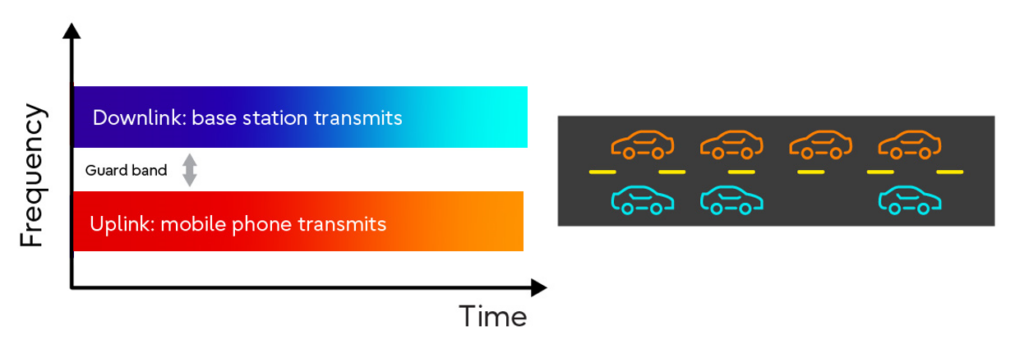

One of the drivers behind this re-emerging requirement is 5G and its use of Time Division Duplexing (TDD). Previous generations of mobile networks largely used separate frequency bands, or Frequency Division Duplexing (FDD), for the transmit and receive signals. This approach is analogous to a two-lane road where cars traveling in each direction have their own dedicated lanes, and the traffic is continuously flowing.

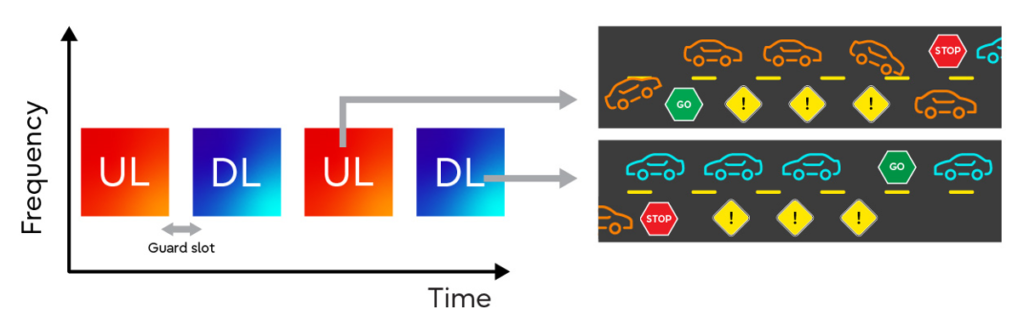

TDD uses a different approach: signals occupy the same frequency band, but transmit and receive signals are separated in time, i.e. they take turns talking. This is analogous to traffic flow in a construction zone when the road is reduced to a single lane. A worker on each side of the single lane must signal the cars to stop or go – and they must be synchronized with each other to ensure proper traffic flow. This is where an accurate timing source, available at both ends of the connection, becomes critical to the integrity of the 5G wireless connection.
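The construction-zone analogy can be sketched as a toy model in Python. Two ends of a link take turns transmitting in alternating slots, each stopping a short guard time before its slot ends, and the code measures how long both ends transmit at once when one clock is off. The slot length, guard time, clock errors, and function names are all hypothetical and are not taken from any 5G specification.

```python
def overlap(a_start, a_end, b_start, b_end):
    """Length of time two intervals overlap (0 if they do not)."""
    return max(0, min(a_end, b_end) - max(a_start, b_start))


def collision_time_us(slot_us, guard_us, clock_error_us, slots=4):
    """Total time both ends transmit simultaneously.

    End A transmits in even-numbered slots, end B in odd-numbered
    slots. Each stops guard_us before its slot ends. End B's clock is
    off by clock_error_us (positive means B runs late).
    """
    total = 0
    for a in range(0, slots, 2):
        a_start = a * slot_us
        a_end = (a + 1) * slot_us - guard_us
        for b in range(1, slots, 2):
            b_start = b * slot_us + clock_error_us
            b_end = (b + 1) * slot_us - guard_us + clock_error_us
            total += overlap(a_start, a_end, b_start, b_end)
    return total


# Hypothetical 500 us slots with a 10 us guard time.
print(collision_time_us(500, 10, 0))    # 0: perfectly aligned
print(collision_time_us(500, 10, 5))    # 0: error absorbed by the guard time
print(collision_time_us(500, 10, 25))   # 15: error exceeds the guard time
```

In this toy model the guard time acts as the timing budget: errors smaller than it are harmless, while larger errors put both "flag workers" on go at the same moment.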

Access to a Master Clock Reference Signal

Most infrastructure requiring timing uses the Global Positioning System’s (GPS) reference timing signal. Onboard each satellite is a high-precision atomic clock, the “master” clock whose signal is broadcast from space. On land, a GPS clock decodes and locks on to this signal, and it becomes the reference clock for the local network equipment it is connected to. In turn, the network may distribute this timing to downstream nodes. GPS clocks deliver high precision at relatively low cost, and hence are widely deployed, particularly at cell towers. However, getting a good reference signal requires an unobstructed line of sight to multiple GPS satellites, which is not always feasible. GPS signals can be degraded by fading as well as by nefarious activities such as jamming or spoofing. Jamming is on the rise, with the market for anti-jamming devices reportedly growing at 11% annually.

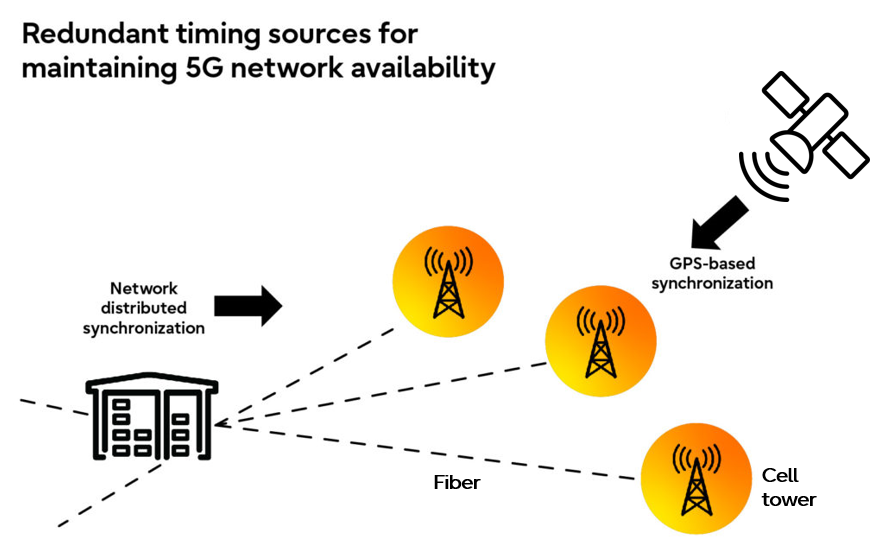

This is why utilities and communication providers need a backup. In addition to a local GPS clock, they also transport and distribute a timing reference signal over the network.

The picture below depicts a typical architecture where the primary timing reference signal for the cell towers is the GPS signal. A secondary source – the network distributed signal – is transmitted to each cell tower over fiber and used by the base station radios if the GPS clock fails.
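The primary/secondary arrangement described here amounts to a simple selection rule, sketched below in Python. The class, the function, and the fallback to a local oscillator when both sources are unavailable are assumptions made for illustration; real base stations apply more elaborate health checks and switching criteria.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class TimingSource:
    name: str
    healthy: bool


def select_reference(primary: TimingSource,
                     secondary: TimingSource) -> Optional[TimingSource]:
    """Prefer the primary source; fall back to the secondary.

    Returns None when neither source is usable, in which case the
    equipment is assumed to run on its own local oscillator.
    """
    if primary.healthy:
        return primary
    if secondary.healthy:
        return secondary
    return None


# Hypothetical cell site: GPS is jammed, network timing is still good.
gps = TimingSource("GPS", healthy=False)
network = TimingSource("network-distributed", healthy=True)

chosen = select_reference(gps, network)
print(chosen.name if chosen else "local oscillator")  # network-distributed
```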

Timing Reference Signal Transport

Stay tuned for my next blog in this short series, which will focus on the requirements for transporting an accurate and reliable reference signal over the optical network. Transport of this type of signal needs to be carefully engineered and must adhere to strict requirements in order to guarantee the signal’s accuracy.