Examining AI security threats

Artificial Intelligence (AI) is transforming industries and reshaping how businesses operate and how people interact with technology. From automating complex processes to making real-time decisions, AI has become a valuable tool across many sectors. This rapid adoption also brings security risks, including data privacy concerns, adversarial attacks, and AI-driven cyber threats. Organizations must address these challenges proactively so that AI remains an asset rather than becoming a vulnerability.

Understanding AI’s architecture, its potential security threats, and the best mitigation strategies is essential for ensuring a safe and responsible AI-driven future. This article explores AI’s foundational components, common AI security risks, existing security controls, industry standards, regulations, and global efforts to ensure AI remains a force for good while minimizing potential dangers.

Types of AI and real-world applications

AI refers to the simulation of human intelligence in machines that can perform tasks such as learning, reasoning, problem-solving, and decision-making.

What are the different types of AI?

- Narrow AI (Weak AI): Performs specific tasks (e.g., chatbots, recommendation systems)

- General AI (Strong AI): Would be able to perform any intellectual task a human can do; not yet achieved

- Super AI: Hypothetical future AI surpassing human intelligence

What are the business benefits of AI?

- Enhanced automation and efficiency

- Improved customer experience

- Data-driven decision-making

- Cost reduction and operational improvements

What are the real-world applications for AI?

- Communications: AI is used in automated customer support (chatbots), sentiment analysis, and real-time language translation

- Automated tech issue resolution: AI-driven systems help detect and resolve IT issues proactively, reducing downtime and improving system performance

- 5G, 6G, and Open RAN: AI optimizes network operations, manages spectrum efficiency, and enhances predictive maintenance in next-generation wireless networks

- Healthcare: Disease diagnosis, robotic surgeries

- Finance: Fraud detection, algorithmic trading

- Retail: Personalized recommendations, demand forecasting

- Transportation: Autonomous vehicles, traffic management

- Cybersecurity: Threat detection and response

The architecture and operations of AI

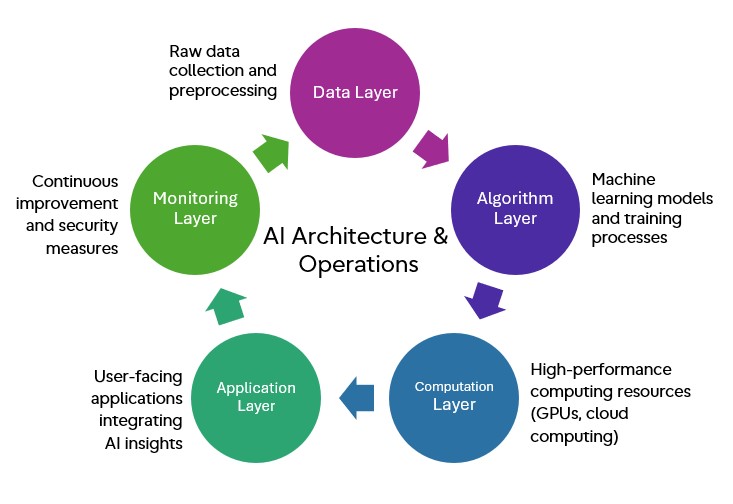

AI systems consist of multiple interconnected layers that ensure efficient functionality and security. At the core of AI operations lies the Data Layer, responsible for collecting raw data from various sources and preprocessing it to remove inconsistencies. This refined data is then passed to the Algorithm Layer, where Machine Learning (ML) models are trained and optimized to identify patterns and make predictions. Next, the Computation Layer provides the necessary high-performance computing resources, such as GPUs and cloud-based processing, to support AI workloads. The Application Layer utilizes these trained models to deliver AI-driven insights and services to end-users through various platforms and interfaces. Finally, the Monitoring and Feedback Layer plays a crucial role in continuous improvement by evaluating AI performance, identifying potential security vulnerabilities, and implementing updates to enhance functionality and safety.

Figure 1: The architecture and operations of AI
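The flow between the layers described above can be illustrated with a deliberately tiny sketch. Everything in it is an assumption made for illustration: the function names, the toy records, and the one-parameter "model" (a threshold halfway between the two class means) are hypothetical, and the Computation Layer is simply the local Python interpreter rather than GPUs or cloud resources.

```python
from statistics import mean

def data_layer(raw_records):
    """Data Layer: keep only records with a numeric value and a 0/1 label."""
    return [(float(v), y) for v, y in raw_records
            if isinstance(v, (int, float)) and y in (0, 1)]

def algorithm_layer(records):
    """Algorithm Layer: 'train' a toy model, the midpoint between class means."""
    mean_pos = mean(v for v, y in records if y == 1)
    mean_neg = mean(v for v, y in records if y == 0)
    return (mean_pos + mean_neg) / 2

def application_layer(threshold, value):
    """Application Layer: serve a prediction from the trained model."""
    return 1 if value >= threshold else 0

def monitoring_layer(threshold, labelled_records):
    """Monitoring and Feedback Layer: measure accuracy on fresh labelled data."""
    hits = sum(application_layer(threshold, v) == y for v, y in labelled_records)
    return hits / len(labelled_records)

# Hypothetical raw input containing two unusable records.
raw = [(1.0, 0), (2.0, 0), (None, 1), (8.0, 1), (9.0, 1), ("n/a", 0)]
clean = data_layer(raw)
threshold = algorithm_layer(clean)
accuracy = monitoring_layer(threshold, [(1.5, 0), (8.5, 1), (6.0, 1), (4.0, 1)])
print(threshold, accuracy)
```

In a real system each function would be a separate service or stage, and a drop in the monitored accuracy would be one signal that the data or the model needs attention.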

Security threats to AI architecture and operations

What are the top AI security threats?

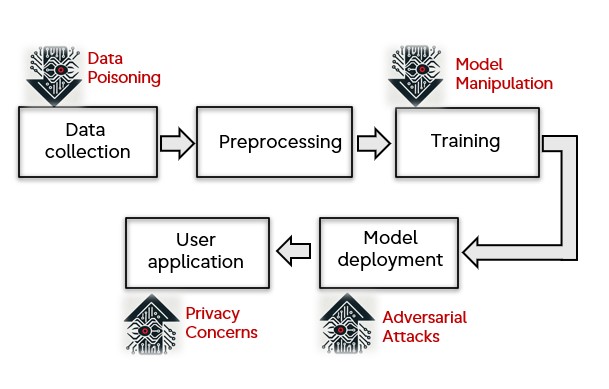

AI systems face a range of security threats that can compromise their integrity and effectiveness. Data poisoning occurs when malicious actors manipulate training data to corrupt AI models, leading to inaccurate predictions and biased outcomes. Model inversion attacks pose another serious risk by extracting sensitive information from AI models, potentially exposing confidential data. Adversarial attacks exploit vulnerabilities in AI systems by modifying input data in a way that deceives the model into making incorrect decisions. Additionally, AI-driven decision-making can suffer from bias and discrimination, leading to unfair outcomes and ethical concerns. AI-powered cyberattacks enhance traditional cyber threats by automating hacking techniques and improving their effectiveness. Lastly, privacy breaches remain a significant challenge, as AI systems often process vast amounts of personal data, increasing the risk of unauthorized access and exposure.

- Data poisoning: Manipulating training data to corrupt AI models

- Model inversion attacks: Extracting sensitive information from AI models

- Adversarial attacks: Manipulating input data to deceive AI systems

- Bias and discrimination: Unintended biases in AI decision-making

- AI-powered cyberattacks: Using AI to enhance cyber threats

- Privacy breaches: Unintended exposure of sensitive user data

Figure 2: Key AI security threats
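To make the adversarial attack entry more concrete, here is a minimal sketch against a linear classifier. It assumes the attacker knows the model weights; the weights, bias, input, and `epsilon` are hypothetical values chosen for illustration. Each feature is shifted by at most `epsilon` in the direction that lowers the score, which is the same idea behind sign-based gradient attacks on larger models.

```python
def score(weights, bias, x):
    """Linear model score: w . x + b."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def predict(weights, bias, x):
    """Classify as 1 when the score is positive, otherwise 0."""
    return 1 if score(weights, bias, x) > 0 else 0

def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def adversarial_example(weights, x, epsilon, true_label):
    """Shift every feature by at most epsilon to push the score away from true_label."""
    direction = -1 if true_label == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]

# Hypothetical model and input.
weights = [2.0, -1.0, 0.5]
bias = -0.5
x = [1.0, 0.5, 1.0]

x_adv = adversarial_example(weights, x, epsilon=0.5, true_label=1)
print(predict(weights, bias, x), predict(weights, bias, x_adv))
```

The original input is classified as 1, while the perturbed input, which differs by no more than 0.5 in any feature, is classified as 0.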

What controls are in place to address AI security threats?

| Security threat | Security control | Control description |
| --- | --- | --- |
| Data poisoning | Data validation and anomaly detection | Verifies the integrity of training data and flags anomalous records, helping prevent the introduction of maliciously manipulated data |
| Model inversion attacks | Differential privacy techniques | Adds calibrated noise during training or to query results so that individual records cannot be inferred, while still enabling AI model training and predictions |
| Adversarial attacks | Robust model training methods | Enhances AI resilience by training models to recognize and resist manipulated or adversarial inputs |
| Bias and discrimination | Ethical AI and fairness auditing | Implements auditing and testing frameworks to identify and mitigate unintended biases in AI decision-making |
| AI-powered cyberattacks | AI-driven threat intelligence | Uses AI-powered cybersecurity tools to detect, prevent, and respond to AI-driven cyber threats |
| Privacy breaches | Federated learning and encryption | Protects user data by enabling secure data processing across decentralized networks without exposing raw data |

Figure 3: Controls to address AI security threats
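Two of the controls in the table can be sketched in a few lines. The first function is one possible form of data validation: it drops values whose modified z-score, based on the median absolute deviation, exceeds a cutoff. The second is the Laplace mechanism, a standard building block of differential privacy, applied here to a simple counting query rather than to model training. The function names, sample data, cutoff of 3.5, and `epsilon` of 0.5 are assumptions made for illustration.

```python
import random
from statistics import median

def filter_outliers(values, max_deviation=3.5):
    """Data validation: drop values whose modified z-score exceeds max_deviation."""
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        return list(values)  # No spread to measure against; keep everything.
    return [v for v in values if 0.6745 * abs(v - center) / mad <= max_deviation]

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(records, predicate, epsilon, rng):
    """Differential privacy: a count has sensitivity 1, so the noise scale is 1/epsilon."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)

# Hypothetical sensor readings with one injected (poisoned) value.
readings = [10.1, 9.8, 10.3, 9.9, 10.0, 55.0]
cleaned = filter_outliers(readings)

# Hypothetical ages; the query asks how many people are 65 or older.
ages = [34, 71, 52, 68, 45, 80, 29]
rng = random.Random(0)
noisy = private_count(ages, lambda a: a >= 65, epsilon=0.5, rng=rng)
print(cleaned, noisy)
```

A smaller `epsilon` adds more noise and gives stronger privacy at the cost of accuracy. A simple filter like this only catches crude poisoning; carefully crafted poisoned samples that stay within the normal range would pass through it.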

Why are AI security standards important?

AI security standards are essential for ensuring the safe and ethical deployment of Artificial Intelligence. They provide a structured approach to risk management, data privacy, and bias mitigation, helping organizations build trust and accountability in AI systems. Adhering to these frameworks enhances security, ensures regulatory compliance, and mitigates vulnerabilities that could compromise AI integrity. By implementing these standards, businesses can foster responsible AI innovation while safeguarding users and stakeholders from potential threats.

What are notable AI security standards?

- ISO/IEC 42001: AI management system standard

- NIST AI Risk Management Framework (AI RMF): AI risk mitigation guidelines

- IEEE P7000 Series: AI ethics and security

- GDPR & AI: Data privacy implications for AI operations

What are governments doing to safeguard AI?

Governments around the world are investing in AI security research through funding programs and grants. These initiatives support the development of advanced threat detection mechanisms, AI-driven cybersecurity tools, and privacy-preserving technologies. By promoting collaboration between academia, industry, and regulatory bodies, these grants help accelerate innovation while ensuring AI systems remain secure and trustworthy.

- AI policy development: Creating ethical guidelines and AI governance frameworks

- Cybersecurity enhancements: Funding AI security research and workforce development

- International AI alliances: Collaborations between nations to ensure AI safety

- Regulatory sandboxes: Testing AI applications under controlled conditions

What are the current AI regulations for businesses?

As AI technology continues to evolve, governments worldwide are implementing regulations to ensure its responsible use. These regulations focus on ethical considerations, data privacy, and accountability, ensuring AI systems operate transparently and fairly. Businesses must comply with these evolving legal frameworks to mitigate risks, build consumer trust, and avoid regulatory penalties. By adhering to AI regulations, companies can ensure their AI solutions align with legal and ethical standards while fostering innovation in a secure and controlled environment.

- European Union AI Act: Establishes AI risk classification and compliance requirements

- U.S. Executive Order on AI: Guidelines on AI development and use

- China’s AI Governance Initiatives: AI development policies and security mandates

- OECD AI Principles: Global AI governance recommendations

AI for peace, prosperity, and wealth

AI has the potential to drive positive global change by:

- Enhancing healthcare: Early disease detection, personalized treatments

- Promoting education: AI-powered tutoring and personalized learning

- Driving economic growth: Job creation in AI-driven industries

- Advancing sustainability: Climate change predictions, energy efficiency optimizations

As AI continues to evolve, its responsible and secure deployment will be critical in shaping a better future. By prioritizing ethical AI development, strong security measures, and global cooperation, we can harness AI’s power to foster innovation, improve quality of life, and build a more prosperous and equitable world for future generations.