Open Radio Access Networks (O-RANs) have been getting a lot of attention from Mobile Network Operators (MNOs) over the past few years. Some MNOs are evaluating which solutions are best for their networks, while others are already planning upgrades to existing O-RAN solutions. When evaluating Central Unit/Distributed Unit (CU/DU) options, they most commonly consider three key factors:

- Virtualization

- Energy efficiency

- Capacity

Beyond these three factors, MNOs are beginning to raise the bar by considering future applications. Many MNOs today are deploying 5G primarily for its higher capacity, so that they can offer higher speeds to consumers and claim a marketing advantage. But 5G was also developed to support low latency, and the industry has so far been slow to take advantage of it. One challenge with providing low-latency applications has been the need to deploy edge computing economically. Until now, MNOs that wanted edge computing capabilities have had to consider deploying an extra server, which typically requires a lot of extra capex, power, and space. One area of potential opportunity for them is investigating latency-sensitive applications and which RAN solutions will best support xApps or AI capabilities.

Now, Fujitsu is working with NVIDIA to develop a solution that brings power savings, reduced ownership cost, advanced traffic management, and AI-based optimization to Fujitsu’s O-RAN virtual Central Unit/Distributed Unit (vCU/DU). This 5G vCU/DU solution will offer key performance boosts and cost-effective features in a single box, giving MNOs the ability to add xApps and RAN Intelligent Controller (RIC) functionality without the need for additional hardware.

Cloud-native solution provides O-RAN DU software and supports edge AI applications and xApps on the same converged accelerator card

The solution consists of Fujitsu’s virtualized O-RAN product suite, which builds on NVIDIA’s converged accelerator platforms. The solution includes a virtualized Central Unit (vCU) and Distributed Unit (vDU), combined with the NVIDIA Aerial SDK, comprehensive AI frameworks, and the NVIDIA A100X converged accelerator, as well as Wind River’s Wind River Studio Cloud Platform.

The high-performance, energy-efficient, AI-enabled vDU has inline accelerator capabilities and integrated edge computing power on the same card in a single server. A single server that can host multiple mobile network functions has clear value for operators. But MNOs can also use it to generate new revenue streams as part of an enterprise offering.

Dynamic Resource Allocation and vRAN autoscaling based on capacity demand

The key drivers of the AI-enabled vCU/DU solution’s features are the Dynamic Resource Allocation and vRAN Autoscale capabilities, which can be implemented at the processor or network element level using the NVIDIA converged accelerator.

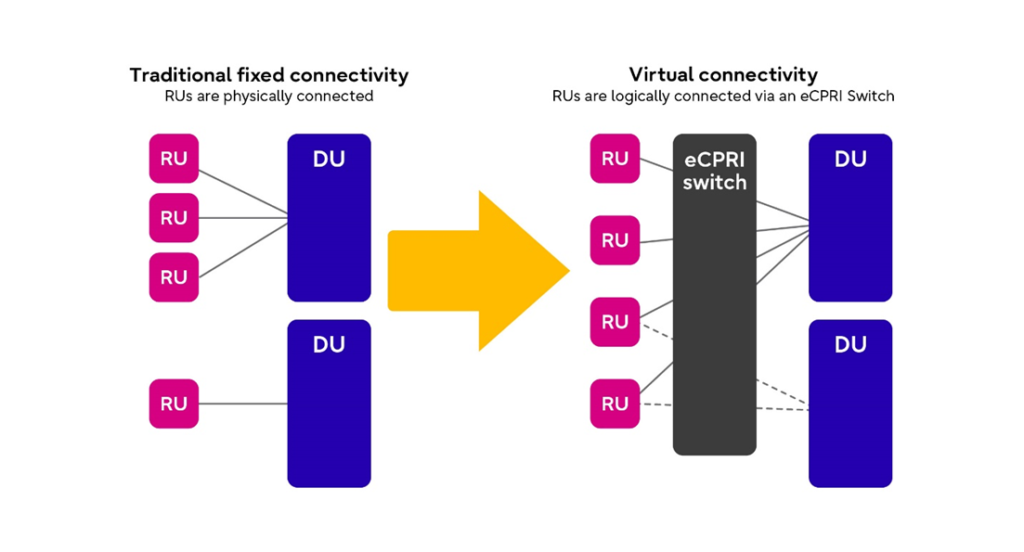

Dynamic Resource Allocation uses AI/ML to predict RU traffic, anticipate peaks and troughs in traffic volume, and assign Radio Units (RUs) to Distributed Units (DUs) dynamically via an eCPRI switch (see Figure 1). As a result, RUs can be consolidated onto a smaller number of DUs, and any idle DUs can be temporarily deactivated to reduce power consumption during low-traffic periods. These inactive DUs are then reactivated when capacity demand increases. Dormant DU capacity that can be software activated on demand also improves overall system reliability.
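The consolidation step described above can be illustrated with a minimal sketch. It assumes a traffic prediction is already available as a load figure per RU (expressed here as a percentage of one DU's capacity) and uses a simple first-fit-decreasing packing heuristic as a stand-in; the article does not say which algorithm the solution actually uses, and the function name, RU names, and load values are all hypothetical.

```python
from typing import Dict, List


def consolidate_rus(predicted_load: Dict[str, int], du_capacity: int) -> List[List[str]]:
    """Pack RUs onto DUs with a first-fit-decreasing heuristic.

    predicted_load maps a (hypothetical) RU name to its predicted load,
    in the same units as du_capacity. Returns one list of RU names per
    DU that must stay active; any other DU in the pool could be idled.
    """
    assignments: List[List[str]] = []  # RU names on each active DU
    spare: List[int] = []              # remaining capacity of each active DU

    # Place the heaviest RUs first so that small ones fill the gaps.
    ordered = sorted(predicted_load.items(), key=lambda kv: kv[1], reverse=True)
    for ru, load in ordered:
        if load > du_capacity:
            raise ValueError(f"{ru} exceeds the capacity of a single DU")
        for i, free in enumerate(spare):
            if load <= free:
                assignments[i].append(ru)
                spare[i] -= load
                break
        else:
            # No active DU has room, so activate another one.
            assignments.append([ru])
            spare.append(du_capacity - load)
    return assignments


# Hypothetical predictions, in percent of one DU's capacity.
night = {"ru1": 20, "ru2": 10, "ru3": 30, "ru4": 20}
peak = {"ru1": 70, "ru2": 60, "ru3": 40, "ru4": 30}

print(len(consolidate_rus(night, 100)))  # 1 active DU
print(len(consolidate_rus(peak, 100)))   # 2 active DUs
```

In this toy example the overnight prediction fits on one DU and the peak prediction needs two, so the difference is the capacity that could be powered down and reactivated later.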

High availability

Flexible assignment of RUs to other DUs (inter-DU redundancy) enables continued operation in the event of a DU failure.

Figure 1: Dynamic Resource Allocation

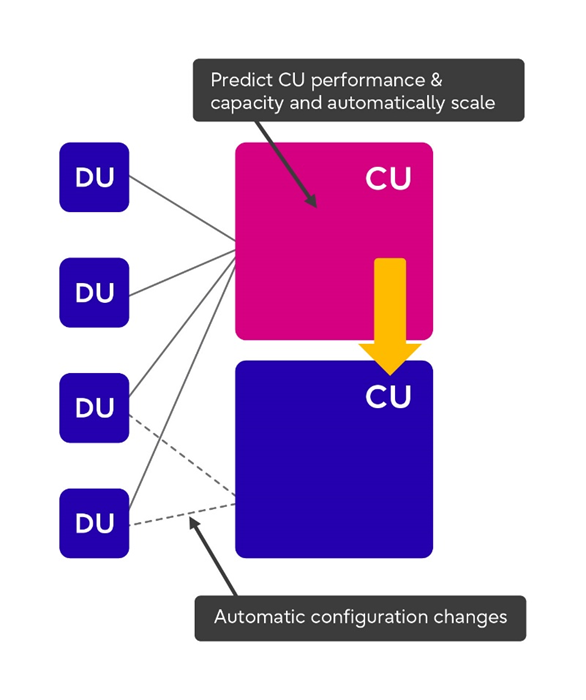

vRAN Autoscale (Figure 2) operates in a similar manner to Dynamic Resource Allocation, but dynamically assigns DUs to CUs. In both cases, AI technology anticipates changes in traffic patterns and uses this data to predictively scale capacity.

Figure 2: vRAN Autoscale
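As a rough illustration of predictive scaling, the sketch below forecasts the next interval's load and sizes capacity ahead of demand. Simple exponential smoothing stands in for the AI/ML predictor, which the article does not describe; the function names, the 20% headroom, and the sample figures are assumptions for illustration only.

```python
import math
from typing import Sequence


def forecast_next(samples: Sequence[float], alpha: float = 0.5) -> float:
    """Forecast the next interval's load with simple exponential smoothing."""
    if not samples:
        raise ValueError("need at least one sample")
    level = samples[0]
    for x in samples[1:]:
        # Blend the newest observation with the running estimate.
        level = alpha * x + (1 - alpha) * level
    return level


def instances_needed(forecast: float, per_instance_capacity: float,
                     headroom: float = 0.2) -> int:
    """Instances required to carry the forecast plus a safety margin."""
    required = forecast * (1 + headroom) / per_instance_capacity
    return max(1, math.ceil(required))  # always keep at least one instance up


# Hypothetical load samples from the last four intervals (arbitrary units).
history = [100.0, 200.0, 300.0, 400.0]
predicted = forecast_next(history)
print(predicted)                           # 312.5
print(instances_needed(predicted, 100.0))  # 4
```

Scaling on the forecast rather than on the current load is what makes the behavior predictive: capacity is brought up before the peak arrives rather than after it.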

Multi-Instance GPU capability helps MNOs plan for growth

The NVIDIA accelerator platform’s optimization capability is the “secret sauce” behind the ability to deactivate and power off idle or unused cores in low-traffic conditions. Using NVIDIA Multi-Instance GPU (MIG) energy-wise partitioning technology, the converged card can partition the GPU into as many as seven instances, each fully isolated with its own high-bandwidth memory, cache, and compute cores. This capability can be very helpful to MNOs that are planning for growth but may not have a lot of traffic initially. By activating just a few of the cores, the MNO can minimize opex while retaining the option to increase processing capacity automatically as traffic demands grow.
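The idea of activating only as many instances as traffic requires can be sketched as follows. This is a simplified model, not NVIDIA's API: it assumes seven equal-sized instances and a load expressed as a fraction of full-GPU capacity, and the function name and parameters are hypothetical.

```python
import math

# The article states the GPU can be split into as many as seven instances.
MAX_MIG_INSTANCES = 7


def active_instances(load_fraction: float, min_active: int = 1) -> int:
    """Instances to keep active for a load given as a fraction of the full GPU.

    Assumes equal-sized instances, which is a simplification made for
    this illustration only.
    """
    if not 0.0 <= load_fraction <= 1.0:
        raise ValueError("load_fraction must be between 0 and 1")
    needed = math.ceil(load_fraction * MAX_MIG_INSTANCES)
    return min(MAX_MIG_INSTANCES, max(min_active, needed))


print(active_instances(0.25))  # 2 instances for an early, lightly loaded site
print(active_instances(1.0))   # 7 instances at full load
```

A newly deployed site with little traffic would run on one or two instances in this model, leaving the rest idle until demand grows.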

Industry-leading performance and capacity

Fujitsu’s vDU design communicates with the accelerator card much more frequently than competitors’ implementations. This increases performance, which affords the following benefits:

- Increased capacity (measured in cells per DU)

- Increased latency budget

Separately or in combination, the increased capacity and latency budget are highly beneficial, for instance by enabling RUs to be deployed at greater distances from the DU.
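The link between latency budget and RU distance can be made concrete with a small worked example. It assumes a fixed total latency budget shared between DU processing and fronthaul transport, and a one-way propagation delay in optical fiber of roughly 5 µs per km. The budget and processing times are hypothetical figures chosen for the arithmetic, not Fujitsu measurements.

```python
# Approximate one-way propagation delay in optical fiber.
FIBER_DELAY_US_PER_KM = 5.0


def max_fronthaul_km(total_budget_us: float, du_processing_us: float) -> float:
    """Longest fiber run whose propagation delay fits in the leftover budget."""
    transport_us = total_budget_us - du_processing_us
    if transport_us < 0:
        raise ValueError("processing time exceeds the total budget")
    return transport_us / FIBER_DELAY_US_PER_KM


# Hypothetical numbers: a 250 µs budget, with DU processing taking
# 150 µs before acceleration gains and 100 µs after.
print(max_fronthaul_km(250.0, 150.0))  # 20.0 km
print(max_fronthaul_km(250.0, 100.0))  # 30.0 km
```

Under these assumptions, every microsecond saved in DU processing becomes transport budget, and each additional 5 µs of transport budget allows about one more kilometer of fiber between the RU and the DU.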

The benefits of an AI-enabled vCU/DU

By combining vCU/DU accelerator processing and AI edge processing on a single converged accelerator card, the Fujitsu AI-Enabled vCU/DU offers some significant benefits to MNOs as they roll out or enhance their 5G service delivery capabilities.

- Since this is a virtual CU/DU solution, cost is reduced because it can be upgraded via remote software upgrade only, rather than by deploying new hardware and/or visiting the site.

- Adding low-latency edge applications requires no additional edge hardware, which reduces both capex and opex for these applications.

- The solution only needs a single accelerator card per server. Less hardware means capex savings, reduced power consumption, and a smaller overall footprint.

- Overall network performance gets a boost from the increased frequency of interaction with the accelerator, which can be used to increase capacity and reduce demands on the transport latency budget.

- The solution offers industry-leading density in terms of the number of cells that can be assigned to a DU in a single 2U server.

- The increased accelerator performance enables MNOs to deploy radios up to 66% farther away from the DU, which is ideal for centralized RAN deployments or for more flexible RU deployment where site footprint is limited or constrained.

- Dynamic resource allocation frees up computing capacity for other purposes.

- Advanced traffic management is facilitated by the ability to predict high- or low-traffic times and provide capacity or redundancy whenever needed.

- Locating computing power at the network edge is optimal for AI capabilities, processing resources to support xApps, and low-latency applications.

The sustainability story: Fujitsu’s solution powered by NVIDIA helps you “reduce, reuse, & recycle”

Three main principles have guided sustainability initiatives for decades: reduce, reuse, and recycle. Fujitsu has innovatively applied these principles to the 5G RAN using NVIDIA technology. First, we have developed a common approach to reduce power consumption by deactivating network elements and components temporarily if they are not needed. Second, we turn on and reuse network elements and components when traffic demand increases. Finally, and most importantly, by making the RAN completely virtual, we can recycle unused or underutilized edge computing capacity for AI, xApp or other latency-sensitive edge applications.

From an energy efficiency perspective, the AI-enabled vCU/DU reduces power consumption in the following ways:

- Dynamic Resource Allocation

- vRAN Autoscale

- Multi-Instance GPU (MIG) energy-wise partitioning

How the AI-Enabled vCU/DU solution reduces Total Cost of Ownership (TCO)

Fujitsu’s AI-Enabled vCU/DU built with NVIDIA’s converged accelerator offers multiple TCO benefits to MNOs considering low-latency application deployment on the edge. With this solution, the MNO can deploy both vCU/DU and edge applications on the same server and the same accelerator card, enabling them to:

- Reduce capex by avoiding the purchase of multiple servers and multiple accelerator cards at each site

- Save space by avoiding the deployment of multiple servers and multiple accelerator cards at each site

- Save power by avoiding the operation of multiple servers and multiple accelerator cards at each site

- Reduce management overhead by having fewer network elements to manage

Future potential and possibilities

The AI-Enabled vCU/DU solution and its accelerator capabilities combine the high speed and low latency of 5G with on-board AI capabilities to provide real-time video analytics and extended reality (XR) rendering. The higher availability of compute resources opens up many possible uses of the NVIDIA accelerator platform. These excess compute resources can serve a wide variety of purposes:

- Latency-sensitive mobile networking applications like xApps

- AR/VR applications such as AR-assisted installation or maintenance

- Other enterprise edge applications

MNOs have focused on the capacity benefits of 5G, leaving the latency benefits largely unaddressed. With solutions like the Fujitsu AI-Enabled vCU/DU, MNOs are now well positioned to generate new revenue streams by leveraging their ultra-reliable low-latency communication (URLLC) 5G networks to offer or enable applications where immediate action is the difference between success and failure.